The Old Ruminator

Well-known member

I live in a world where it's now hard to define what is real. I have had numerous calls from "my bank" this week telling me that my account had been hacked (my mate has just lost £7,000 but got it refunded). Yesterday's call asked for me by name and named my bank. Other calls have my local area code. I was so worried that I paid the bank a visit. Yes, all scams, and I was told to ignore everything. So how do I know a real one?

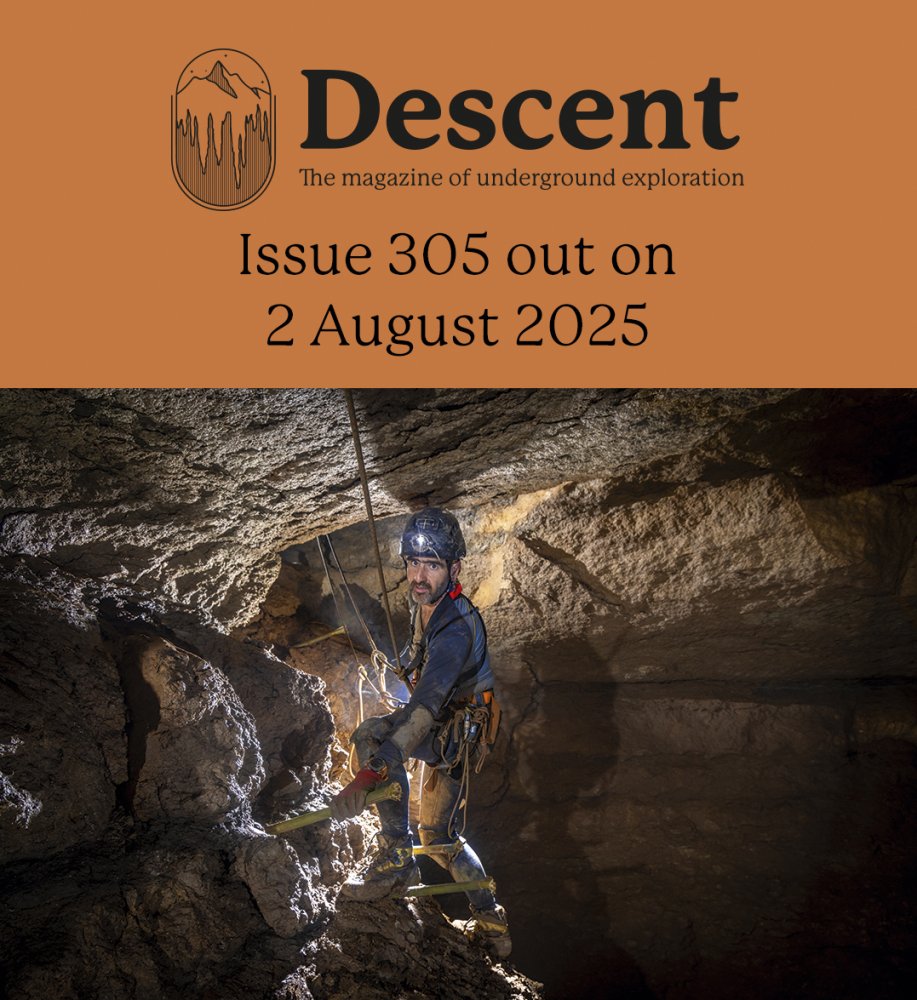

So onward to photographs and a similar trend. The internet is awash with fake images from Hubble etc., such as this one.

Total nonsense of course, but the trend is now spreading to caving images. Some are so perfect in every way that they cannot be "real". Rather like ChatGPT text: when it was examined as student test results, the majority was not picked up. So we say "wow" or that dreadful word "stunning" when seeing some of these fantastic caving images. Sadly they are exactly that, but also utterly false. I do minimal editing and don't shoot in RAW. Why push the image beyond the bounds of reality? Of course I can't show specific caving images here without upsetting somebody, but you will recognise them as they pop up on social media. So how far do we go with it? Ultimately the cameras may decide, as they become more intuitive and start to reflect the way images are presented. The AI will learn what you like best to see and give you that result.
